Language Tribes and AI: Why LLMs Fall Short of Capturing Our True Colors

and why language is not just a mathematical construct.

I am a huge soccer fan, and as a follower of French soccer, I am a regular listener of THE soccer podcast: “L’After foot”. So when I type the question “l’important c’est ?” (“what matters is?”) into ChatGPT, I am not expecting a long dissertation on tactics, approaches to the game, the art of teamwork, sportsmanship…

No, the only answer I want is the one that resonates with every French soccer aficionado: “les 3 points” (the three points a team earns for winning a game).

“L’important c’est les 3 points” (“what matters is the three points”) is an iconic opener of the After foot radio show and a near-cult mantra.

Distributional semantics

You might be wondering: what does this have to do with LLMs, ChatGPT and the broader landscape of generative AI?

At the core of LLMs is the idea that the meaning of a word can be captured mathematically from the contexts in which it appears: words that occur in similar contexts tend to have similar meanings. This is the principle of distributional structure (pioneered by Zellig Harris in 1954).

By analyzing vast amounts of text data, these models learn to associate words with similar meanings based on their contextual usage.

To keep it simple, imagine the internet as a large collection of sentences:

"The dog eats its kibbles."

"The cat eats its kibbles."

"I am taking the dog to the vet."

"I am taking the cat to the vet."

"My favorite pet is a dog."

"My favorite pet is a cat."

In this massive collection of sentences, “dog” and “cat” frequently appear in the same contexts; therefore they are “semantically close” and belong to the same category.
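To make the idea concrete, here is a minimal Python sketch of a toy count-based model. It is not how ChatGPT is actually built (real LLMs learn dense vectors with neural networks over enormous corpora), but it shows the distributional principle at work: each word is represented by the counts of its neighbors, and words are compared with cosine similarity. The stopword list, the window size and the two “car” sentences are illustrative assumptions, not part of the original example.

```python
import math
from collections import Counter

# The example sentences from the text, plus two hypothetical "car" sentences
# added only so that "dog" has an unrelated word to be compared against.
CORPUS = [
    "The dog eats its kibbles.",
    "The cat eats its kibbles.",
    "I am taking the dog to the vet.",
    "I am taking the cat to the vet.",
    "My favorite pet is a dog.",
    "My favorite pet is a cat.",
    "The car needs new tires.",
    "I am taking the car to the garage.",
]

# Function words carry little meaning; dropping them keeps the toy example readable.
STOPWORDS = {"the", "its", "i", "am", "to", "my", "is", "a"}


def tokenize(sentence):
    """Lowercase, strip the final period, split on spaces, drop stopwords."""
    return [t for t in sentence.lower().rstrip(".").split() if t not in STOPWORDS]


def context_vector(word, corpus, window=2):
    """Count every word that appears within `window` positions of `word`."""
    counts = Counter()
    for sentence in corpus:
        tokens = tokenize(sentence)
        for i, tok in enumerate(tokens):
            if tok == word:
                lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
                counts.update(t for j, t in enumerate(tokens[lo:hi], start=lo) if j != i)
    return counts


def cosine(a, b):
    """Cosine similarity between two sparse count vectors (Counters)."""
    dot = sum(count * b[w] for w, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


dog, cat, car = (context_vector(w, CORPUS) for w in ("dog", "cat", "car"))
print(f"dog ~ cat: {cosine(dog, cat):.2f}")  # dog ~ cat: 1.00
print(f"dog ~ car: {cosine(dog, car):.2f}")  # dog ~ car: 0.20
```

Even this crude model puts “dog” and “cat” together and keeps “car” at a distance, purely from the contexts the words share. LLMs replace raw counts with learned vectors, but the underlying intuition is the same.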

This, in a nutshell, is how LLMs such as ChatGPT model language.

Symbolic vs Semantic vs Tribal

Where am I going with all this?

My point is that language is more than semantics.

First, there is a symbolic aspect of language. Symbolic language involves layers of meaning that go beyond the literal, often tied to cultural or historical contexts. For instance, many people associate the color red with revolution. Similarly, sentences about a black swan (“cygne noir”) or “un aigle noir” (a black eagle) have nothing to do with birds. GPT does a reasonably good job of capturing this symbolic aspect: it got the “cygne noir” part right.

But beyond the symbolic, there is another facet of language, which I call tribal.¹

Humans do use language to create a sense of belonging and identity. They use it as a means to associate themselves with groups and tribes, and to differentiate themselves from the rest of humanity.

Sometimes people do this to resist (minorities and oppressed people are forced to invent their own codes and languages); more often, they do it to exist…

In that case, the purpose of language is very similar to a deliberate choice of clothing or style.

emo, sporty, boho

Just as people create and adopt a dress code, they invent a particular slang, sometimes going beyond lexical distortion to change the syntax itself.

Why is it an issue for LLMs?

The challenge for LLMs in capturing the "tribal" aspect of language is significant. The vast and diverse nature of human language, coupled with the pace at which communities innovate and adapt their expressions and slang, makes it close to impossible for these models to keep up.

"Humans will always alter language faster than AI will comprehend it" (my own quote)

LLMs are trained on an immense corpus of data: the internet and other corpora. In effect, they summarize it into a low-resolution version. Even if they grow to trillions of parameters, it’s not gonna be enough to capture the finesse of the millions of “slangs” out there.

French is already underrepresented on the web, accounting for only about 5% of the corpus.

So take the “French soccer fan community” (~14 million people listen to this podcast every month). These millions of fans rarely write more than a tweet or an Instagram post every once in a while… There is no way any model, whatever its size, could reach the granularity needed to capture the finesse of the “After foot fans’ slang”. The content they produce, and that ends up feeding LLMs, is just a drop in the ocean of the internet.

The implications go far beyond soccer! [Yeah, it’s just a game… or so they say.]

I recently worked with a retailer targeting teenagers, and it is a major challenge for them to build a chatbot that responds with the right tone, or a model that creates marketing messages that are cool, trendy and in step with the fast-paced world of teen fashion.

A glimpse of hope: social data

Although the picture is gloomy, there are ways to capture the lingo of tribes.

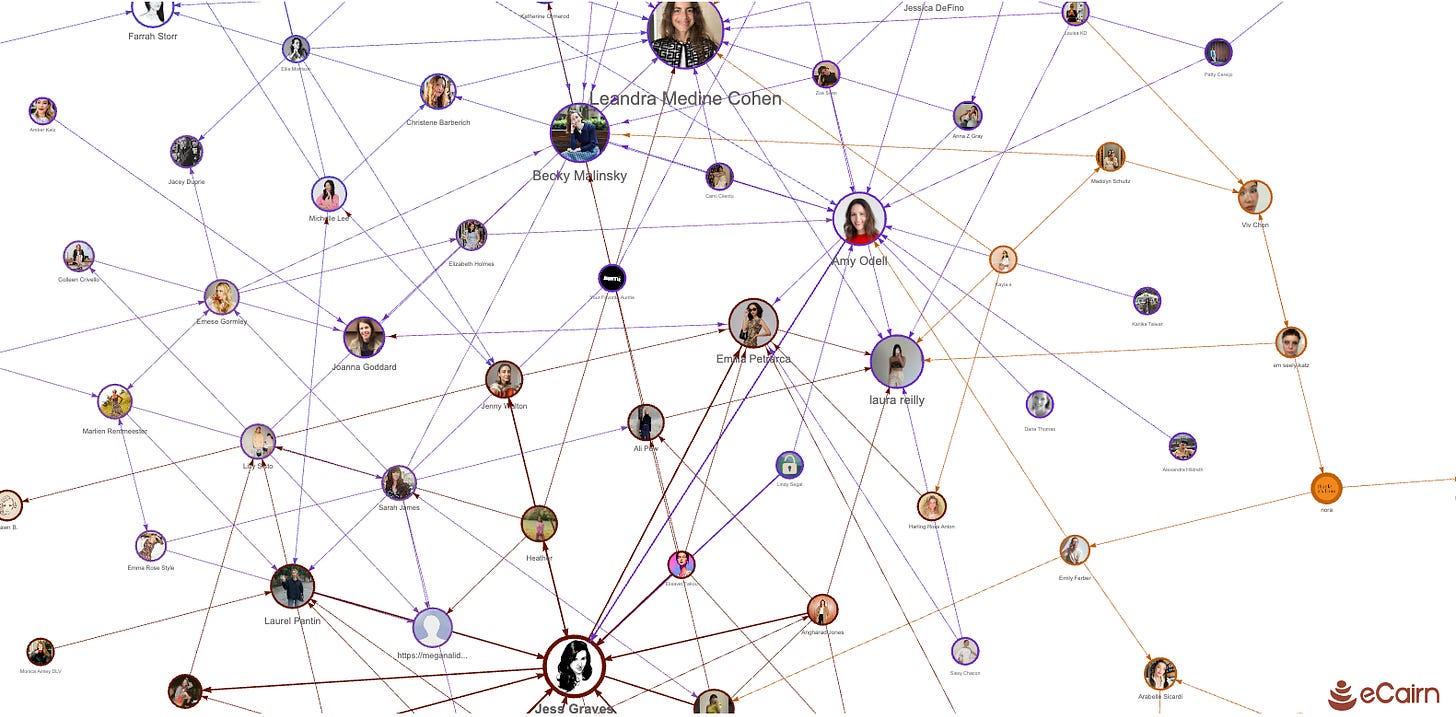

Our strategy at eCairn is to source content directly from social media platforms.

We do this by mapping the influencers (thousands of them) and content creators within a tribe, collecting the content they write, and enriching models with RAG (retrieval-augmented generation, i.e. content injection) and fine-tuning techniques.

Fashion influencers on substack

We will elaborate on this technique in a subsequent post. In the meantime, if you’re interested in how we do this, just contact us!
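For readers curious about the general idea behind RAG (content injection), here is a minimal Python sketch of the retrieval and injection steps. It is a generic illustration, not eCairn’s pipeline: the posts are invented placeholders, bag-of-words cosine similarity stands in for the embedding model a real system would use, and `retrieve` and `build_prompt` are hypothetical helper names. The generation step (sending the prompt to an LLM) and fine-tuning are not shown.

```python
import math
import re
from collections import Counter

# Invented placeholder posts standing in for content collected from a tribe's
# influencers (hypothetical; a real pipeline would pull these from social platforms).
TRIBE_POSTS = [
    "L'important c'est les 3 points, the rest is just talk.",
    "What a match tonight, three more points in the bag.",
    "New thrift haul: oversized jackets and chunky boots.",
]


def bag_of_words(text):
    """Lowercased word counts; a crude stand-in for a real embedding model."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors (Counters)."""
    dot = sum(count * b[w] for w, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, posts, k=2):
    """Return the k posts most similar to the query (the retrieval step)."""
    q = bag_of_words(query)
    return sorted(posts, key=lambda p: cosine(q, bag_of_words(p)), reverse=True)[:k]


def build_prompt(question, posts, k=2):
    """Inject the retrieved posts into the prompt (the augmentation step)."""
    examples = "\n".join(f"- {p}" for p in retrieve(question, posts, k))
    return (
        "Answer the way members of this community talk. Examples of their posts:\n"
        f"{examples}\n\n"
        f"Question: {question}"
    )


print(build_prompt("l'important c'est ?", TRIBE_POSTS, k=1))
```

With the mantra as the question, retrieval surfaces the post containing “les 3 points”, so the model receives the tribe’s own phrasing as context instead of relying only on what it absorbed during training.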

Conclusion

ChatGPT is relatively new, and even though millions of people are using it, we as a society have not yet adapted to a world where much (most?) of the content is generated by AI.

I bet that we humans will not be satisfied with this new content and information landscape, and that we will react.

We need linguistic diversity. We speak many subculture languages and we want to continue doing so.

I bet we will accelerate the pace at which we build slangs and tribal languages, not only to set ourselves apart from the masses but also to differentiate ourselves from AI.

The future is linguistic diversity.

Recognizing individuals based on the tribal language they use is so pivotal that we have developed a patented methodology for it.